An Open Source Investigation into the Future of Life Institute

EA-Funded. EA-Led. But not EA-affiliated?

This post came about because of an email that I sent to the press office of the Future of Life Institute (FLI), in which I introduced myself as the author of Inside Cyber Warfare and explained that I was writing the third edition, which includes a chapter on AI risk.

"During the course of my research over the past year, I've observed that a vast majority of the research in the area of AI and existential risk has come from people affiliated with Open Philanthropy, CFAR, the AI Alignment Forum, LessWrong, and the Rationalism movement.

"CFAR, for example, promotes the fact that four out of five of your organization's founders are graduates of CFAR (Center For Applied Rationality).

"Can you tell me what the relationship is between FLI and CFAR or any of its affiliated organizations and/or the Rationalist movement?"

Someone named "Ben" (presumably Ben Cumming, their Communications Director) responded:

"The Future of Life Institute has no institutional or official relationship with CFAR."

That answer struck me as too carefully worded, so I thought I'd dig deeper.

In the Institute's FAQ, I found this:

Is FLI a longtermist or Effective Altruist (EA) organization?

No, FLI is not affiliated with the Effective Altruist movement or longtermism.

Either the Future of Life Institute has abandoned its roots in EA and Longtermism, or it's being deliberately obtuse to avoid the terrible press (see here, here, and here) that EA has received ever since the OpenAI "Thanksgiving Massacre" last year when the OpenAI board (with at least two EA members) and Sam Altman fired each other.

The Center For Applied Rationality (CFAR)

In July 2016, Open Philanthropy (OP) made a grant of $1,035,000 to the Center for Applied Rationality (CFAR):

"CFAR is an organization that runs workshops aimed at helping participants avoid known cognitive biases, form better mental habits, and thereby make better decisions. Our primary interest in these workshops is that we believe they introduce people to and/or strengthen their connections with the effective altruism (EA) community. CFAR is particularly interested in growing the talent pipeline for work on potential risks from advanced artificial intelligence (AI). More on our interest in supporting work along these lines is here."

Regarding CFAR and the Future of Life Institute, Open Philanthropy had this to say:

"Conversations with CFAR and comments by Max Tegmark (FLI's President and Co-Founder) suggest to us that CFAR’s workshops played a significant role in the founding of the Future of Life Institute (which we have supported in 2015 and 2016)."

Tegmark reportedly said, "CFAR was instrumental in the birth of the Future of Life Institute: 4 of our 5 co-founders are CFAR alumni, and seeing so many talented idealistic people motivated to make the world more rational gave me confidence that we could succeed with our audacious goals."

CFAR itself uses FLI as one of its case studies on its Rationality.org website.

"Victoria (Krakovna) attended a proto-CFAR workshop in 2011, which contributed to her becoming heavily involved in the growing Boston rationality community, which led to her meeting FLI co-founders Jaan Tallinn and Max Tegmark and becoming part of the team that launched FLI."

"Three other FLI co-founders (Jaan Tallinn, Max Tegmark, Meia Chita-Tegmark) attended the March 2013 CFAR workshop and connected with the Boston rationality community in the fall of 2013. Jaan, Max, and FLI co-founder Anthony Aguirre had previously collaborated on the 2011 Foundational Questions Institute conference, and in 2013 they and Meia were interested in doing something more about existential risk."

Open Philanthropy's Funding Record of FLI

From January 2 to 5, 2015, FLI held a conference on AI and existential risk in Puerto Rico. It was a who's who of Effective Altruists, Longtermists, and AI/Existential Risk philosophers and scientists, including Elon Musk, Nick Bostrom, Jaan Tallinn, Stuart Russell, Demis Hassabis, Eliezer Yudkowsky, and many others. Afterward, Musk announced a $10M donation to FLI "to keep AI beneficial to humanity."

Later in 2015, Open Philanthropy offered to support FLI by contributing $1,186,000 as an addition to Musk's $10M.

In March 2016, OP decided to award FLI the sum of $100,000, which is quite a bit less than what FLI said it needed. The OP report accompanying this grant is illuminating:

"(W)e believe it is fairly likely (but still uncertain) that FLI would be able to raise most or all of the funds it requires from other donors. We expect that these donors would largely have similar values and priorities to us (e.g. donors from the effective altruism community), and are therefore not overly concerned by this possibility. With this grant (of $100,000), we expect FLI to be highly likely to raise the funds it requires."

OP went on to award annual grants of $100,000 in 2017 and 2018, $250,000 in 2019, and $176,000 in 2020, yet none of these grants are mentioned on FLI's Wikipedia page. In fact, there's no mention of Open Philanthropy or Effective Altruism at all.

In July 2021, Vitalik Buterin gave FLI $665M in Shiba Inu crypto. No further grants from OP were forthcoming.

SUMMARY

For reasons unknown, the Future of Life Institute doesn't want to be associated with Effective Altruism even though its President and its Board have repeatedly been involved with EA and CFAR over the years, and almost all the organization's funding has come from Effective Altruists.

The Future of Life Institute website warns that "more than half of AI experts believe there is a one in ten chance this technology will cause our extinction." So even though FLI has denied its roots, its core message hasn't changed. That message, which many, including me, believe is seriously flawed, is a key part of its lobbying effort on Capitol Hill, an effort that is gaining traction through massive funding and sheer repetition.

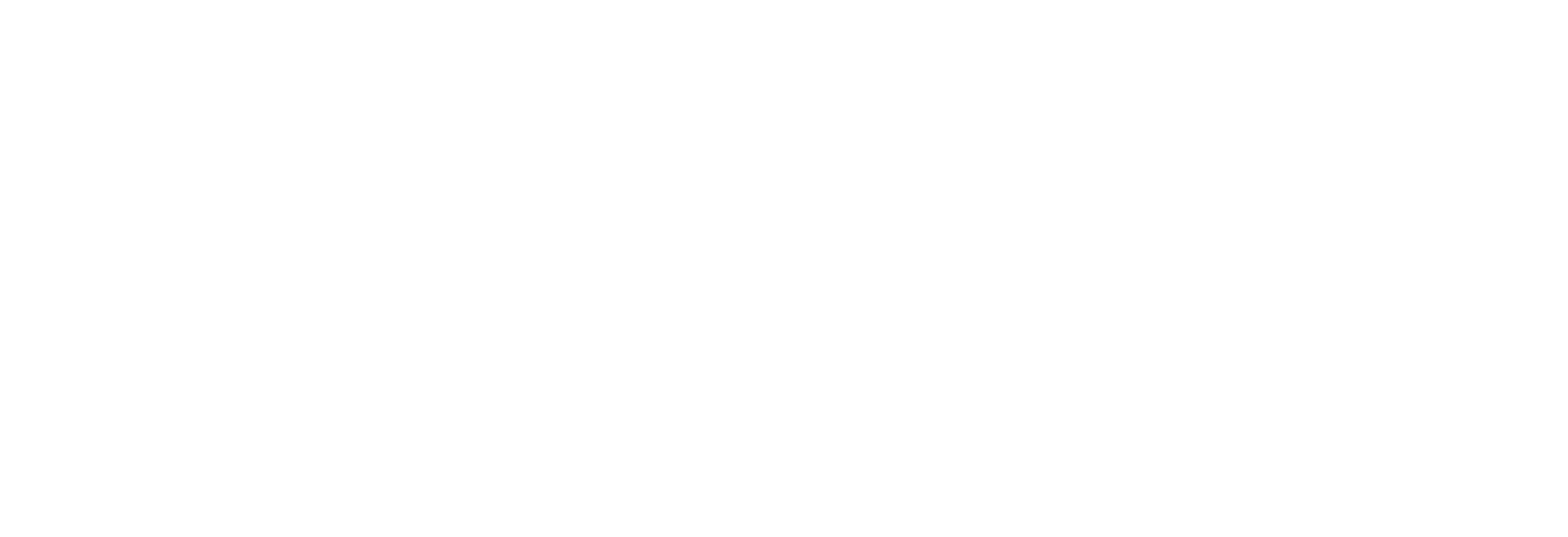

THE OP/EA MIND MAP

You might have noticed that FLI is just a small part of the mind map that I posted at the top of this article. I intend to explore each part of that map in future articles, as well as expand the map to include new entities in the AI and Existential Risk camp.

I want to express my gratitude to the many AI philosophers and scientists I've spoken with over the course of my research who have privately expressed their frustration with this well-financed apocalyptic movement, whose work is stealing time and resources away from real threats in favor of hugely speculative ones.

I'll do my best to give their concerns a voice in this newsletter. If you'd like to help, please subscribe and share this newsletter with others.

For Additional Reading

"The Deaths of Effective Altruism", Leif Wenar, Wired, March 27, 2024

"When Silicon Valley's AI Warriors Came To Washington: A Special Report", Brendan Bordelon, Politico, December 30, 2023

"What is Going On with Effective Altruism", Sophia C. Scott and Bea Wall-Feng, The Harvard Crimson, March 30, 2023
